Intel Core 2 Duo E6700 Review

Saturday 7 May 2011 22:47

Posted by _Blogger_

The good: Most cost-efficient chip on the market; low power consumption makes it cooler, quieter, and easy to use in smaller PC designs.

The bad: Chipset politics between Intel and graphics card vendors hurt gamers, who now have to pick an Intel board for ATI's CrossFire or an Nvidia board for SLI cards.

The bottom line: Intel's Core 2 Duo E6700 offers the best price-to-performance ratio we've seen in a desktop chip. For half the cost of AMD's top-of-the-line chip, you get identical if not superior performance and better power efficiency. AMD surprised us last year with its completely dominant dual-core chips, but Intel regains the crown with Core 2 Duo.

AMD, you've had a good run.

Intel announced its line of Core 2 Duo desktop CPUs today. If you're buying a new computer or building one of your own, you would be wise to see that it has one of Intel's new dual-core chips in it. The Core 2 Duo chips are not only the fastest desktop chips on the market, but also the most cost effective and among the most power efficient. About the only people these new chips aren't good for are the folks at AMD, who can claim the desktop CPU crown no longer.

We've given the full review treatment to two of the five Core 2 Duo chips. You can read about the flagship $999 Core 2 Extreme X6800 here and the entire Core 2 Duo series here. In this review, we examine the next chip down, the $530 2.67GHz Core 2 Duo E6700. While the Extreme X6800 chip might be the fastest in the new lineup, we find the E6700 the most compelling for its price-performance ratio. For just about half the cost of AMD's flagship, the $1,031 Athlon 64 FX-62, the Core 2 Duo E6700 gives you nearly identical, if not faster performance, depending on the application.
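To put the "about half the cost" claim in numbers, here is a quick sketch that uses only the two list prices quoted above. The function name is ours, purely for illustration.

```python
# List prices quoted in this review (USD).
E6700_PRICE = 530
FX62_PRICE = 1031


def price_ratio(price: float, reference_price: float) -> float:
    """Return price as a fraction of reference_price."""
    return price / reference_price


ratio = price_ratio(E6700_PRICE, FX62_PRICE)
print(f"The E6700 costs {ratio:.0%} of the FX-62's list price")
```

The result lands a little above one half, which matches the "just about half" wording.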

As we outlined in a blog post a month ago, the Core 2 Duo represents a new era for Intel. It's the first desktop chip family that doesn't use the NetBurst architecture, which has been the template for every design since the Pentium 4. Instead, the Core 2 Duo uses what's called the Core architecture (not to be confused with Intel's Core Duo and Core Solo laptop chips, released this past January). The advances in the Core architecture explain why even though the Core 2 Duo chips have lower clock speeds, they're faster than the older dual-core Pentium D 900 series chips. The Core 2 Extreme X6800 chip, the Core 2 Duo E6700, and the $316 Core 2 Duo E6600 represent the top tier of Intel's new line, and in addition to the broader Core architecture similarities, they all have 4MB of unified L2 cache. The lower end of the Core 2 Duo line, composed of the $224 E6400 and the $183 E6300, has a 2MB unified L2 cache.

We won't belabor each point here, since the blog post already spells them out, but the key is that no single feature gives the Core 2 Duo chips their strength; rather, a host of design improvements across the chip and the way it transports data improves performance. And our test results bear this out.

On our gaming, Microsoft Office, and Adobe Photoshop tests, the E6700 was second only to the Extreme X6800 chip. Compared to the 2.8GHz Athlon 64 FX-62, the E6700 was a full 60 frames per second faster on Half-Life 2, it finished our Microsoft Office test 20 seconds ahead, and it won on the Photoshop test by 39 seconds. On our iTunes and multitasking tests, the E6700 trailed the FX-62 by only 2 and 3 seconds, respectively. In other words, with the Core 2 Duo E6700 in your system, you'll play games more smoothly, get work done faster, and in general enjoy a better computing experience than with the best from AMD--and for less dough.

MWC 2011: LG overview

22:41

Posted by _Blogger_

Press conference

The LG press conference at the start of MWC 2011 saw the official announcement of the Optimus 3D and Optimus Black smartphones and the 8.9" tablet Optimus Tab.

MWC booth

Of course we also stopped by the LG booth at the MWC itself, where we could spend some more quality time with the new devices.

As you can see from the photos the new announcements generated quite a lot of interest. Still we managed to fight our way through the crowd and got our geeky hands on all of them.

Understandably, we started with the Optimus 3D. Join us after the break for a load of live images, a hands-on video and a real 3D video captured with its cameras. Plus we've got its impressive Quadrant benchmark performance.

Samsung Galaxy S II vs LG Optimus 2X

22:39

Posted by _Blogger_

Introduction

Ah, the power of dual-core processors – it lets you do cool stuff like buttery-smooth multitasking, exciting 3D portable gaming or Full HD videos. The latest Samsung I9100 Galaxy S II packs the new Exynos chipset, which pairs a couple of Cortex-A9 cores with a Mali-400MP GPU. Sounds like a potent mix – at least on paper, so we’re eager to pit it against one of the first phones to offer Nvidia’s Tegra 2 platform - the LG Optimus 2X.

What we have in our hands is a pre-release test unit of the Galaxy S II, successor to one of the best selling Android phones. Ours runs its two CPU cores at 1GHz but Samsung announced that the speed will be bumped up to 1.2GHz.

Once that’s done, the I9100 Galaxy S II would be the most powerful droid – until the competition catches up, which won’t take long judging by our rumor mill. Anyway, we’re going to use the chance to put Samsung’s Exynos chipset head to head with NVIDIA’s Tegra 2. We’ll be using the LG Optimus 2X for the benchmarks, which runs at the same clock speed as our Galaxy S II – 1GHz.

We’re already working on our Galaxy S II preview but until that’s done, we just couldn’t resist testing some of the most interesting new features of Samsung’s latest flagship. Here’s what this comparison will be about.

First off, this is our first encounter with the Super AMOLED Plus technology, which improves on an already excellent screen. We’ll run the new display through several tests to determine how it stacks up against the old one and other leading displays on the market.

Next, we’ll get to the camera department – the Galaxy S II comes with an 8MP still shooter that can capture 1080p FullHD videos. That’s our second encounter with such a beast and we’ll be pitting it against the LG Optimus 2X camera, that’s for sure. A surprising guest star in the camera test is the Sony Ericsson Arc, which we recently reviewed as well.

After that comes the test of what makes the Samsung I9100 Galaxy S II tick – the new dual-core Exynos chipset. Just keep in mind the Galaxy S II retail version will have 20% more clock speed for each of the two CPU cores.

Finally, we’ll wrap things up with a real-life performance test – how does the Galaxy S II stack up in real world tasks (we’ll be testing the user interface, web browser and games) against a phone with a Tegra 2 chipset (which is quite popular among new smartphones and even tablets).

Ready? Then let’s jump to the next page – but be warned, you are in for some serious display envy.

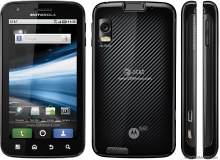

Motorola Atrix 4G review: Enter the Atrix

22:35

Posted by _Blogger_

Introduction

It was the alliance with Android that put Motorola out of the woods. Like every partnership, it’s been a series of peaks and dips but every now and then the relationship between Motorola and Android goes beyond a mere marriage of convenience and well into a simmering love affair. They did it with the MILESTONEs and the DROID X, the BACKFLIP and the DEFY. With the ATRIX 4G, Moto says it has no plans to live in the shadows of other big makers.

Motorola ATRIX 4G official photos

The Motorola ATRIX 4G is the first dual-core smartphone in the Motorola line-up. It’s also the first to flaunt a qHD touchscreen. That’s as solid as credentials get. Add the fact it’s the first handset for Motorola to support the fast HSDPA+ network (hence the 4G moniker) and you’ve got yourself a Droid that’s not afraid of what comes next.

Dual core is certainly the next big thing in mobile phones and the Motorola ATRIX deserves credit for being among the first – our bad really, this review isn’t exactly on time.

But there are other bold decisions that Motorola had to make. The HD and laptop docks for one – though the concept is not exactly original, Motorola is trying to make it mainstream. The added fingerprint scanner is not new either but well forgotten old does just as well. Plus, it will satisfy the privacy freak in all of us.

Anyway, the standard package is what we’re interested in and this is what our review will focus on. The optional extras can wait. The ATRIX is more important to us as a phone (a dual-core smartphone, to be precise) than a wannabe laptop or a potential entertainment dock. Let’s waste no more time and take a glimpse of the ATRIX 4G’s key features.

Key features

- Quad-band GSM and dual-band 3G with HSDPA and HSUPA

- 4" 16M-color capacitive touchscreen of qHD (960 x 540 pixels) resolution, scratch-resistant Gorilla glass

- Dual-core 1GHz ARM Cortex-A9 processor, ULP GeForce GPU, Tegra 2 chipset; 1GB of RAM

- Android OS v2.2; MOTOBLUR UI (update to Gingerbread planned)

- Web browser with Adobe Flash 10.1 support

- 5 MP autofocus camera with dual-LED flash; face detection, geotagging

- 720p video recording @ 30fps (to be upgraded to 1080p Full-HD)

- Wi-Fi a/b/g/n; Wi-Fi hotspot functionality; DLNA

- GPS with A-GPS; Digital compass

- Fingerprint scanner that doubles as a power key

- 16GB storage; expandable via a microSD slot

- Accelerometer and proximity sensor

- Standard 3.5 mm audio jack

- microUSB port (charging) and stereo Bluetooth v2.1 with A2DP

- standard microHDMI port

- Smart and voice dialing

- Office document editor

- Active noise cancellation with a dedicated secondary mic

- DivX/XviD video support

- Lapdock and HD Dock versatility

- Web browser with Adobe Flash 10.2 support

Main disadvantages

- Not the latest Android version

- No FM radio

- Screen image is pixelated upon closer inspection

- Questionable placement of the Power/Lock button

- Poor pinch zoom implementation in the gallery

- No dedicated shutter key

- Doesn’t operate without a SIM card inside

Motorola ATRIX 4G live photos

Garnish all this premium hardware with a 5MP camera with dual LED flash and a 4-inch capacitive touchscreen of qHD resolution of 540x960 pixels, and the ATRIX 4G is more than ready to play with the other dual-core kids.
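For readers who want to turn the resolution and screen size into a pixel density figure, here is a minimal sketch. It assumes square pixels and a diagonal of exactly 4 inches; the function name is our own.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal size, assuming square pixels."""
    diagonal_px = math.hypot(width_px, height_px)  # screen diagonal measured in pixels
    return diagonal_px / diagonal_in


atrix_ppi = pixels_per_inch(540, 960, 4.0)
print(f"ATRIX 4G: about {atrix_ppi:.0f} ppi")
```

The same function works for any other screen quoted as resolution plus diagonal, which makes it easy to compare handsets on paper.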

As for us, we are about to take a closer look at the design and build of the phone and find out if it matches the premium hardware that resides within.

HTC Desire S review: Droid cravings

22:33

Posted by _Blogger_

Introduction

Powerful hardware, large high-res screen and the latest Android version in a single piece of solid metal – the recipe did wonders for the original Desire so no wonder HTC are in no mood to experiment with the sequel. Take the best and make it better pretty much sums up the game plan. Oh well, we’ll take quietly brilliant even if emphasis is sometimes on quiet. In other words, the Desire S is a phone we’re ready to like. But make no mistake – it’s not meant to be the flagship its predecessor was.

HTC Desire S official photos

HTC has the Sensation to send against the heavyweight competition. The new Desire is given a different, though no less important role. Ideally, it should be the smartphone that has broader appeal, the one to offer as reward to loyal upgraders. The phone to give you – wait for it – more bang for your buck than we’ve come to expect from HTC.

Here’s what it puts on the table summarized.

Key features

- Quad-band GSM and dual-band 3G support

- 14.4 Mbps HSDPA and 5.76 Mbps HSUPA

- 3.7" 16M-color capacitive LCD touchscreen of WVGA resolution (480 x 800 pixels)

- Uses the best screen from HTC so far (along with the Incredible S)

- Android OS v2.3 Gingerbread with HTC Sense

- 1 GHz Scorpion CPU, Adreno 205 GPU, Qualcomm Snapdragon MSM8255 chipset

- 768 MB RAM and 1.1 GB ROM

- 5 MP autofocus camera with LED flash and geotagging

- 720p video recording @ 30fps

- Wi-Fi b/g/n and DLNA

- GPS with A-GPS

- microSD slot up to 32GB (8GB card included)

- Accelerometer and proximity sensor

- Standard 3.5 mm audio jack

- Stereo FM radio with RDS

- microUSB port (charging) and stereo Bluetooth v2.1

- Smart dialing, voice dialing

- Front facing camera, video calls

- DivX/XviD video support

- Compact aluminum unibody

- Gorilla glass display

- HTC Locations app

- HTCSense.com integration

- HTC Portable Hotspot

- Ultra-fast boot times (if you don’t remove battery)

Main disadvantages

- No dedicated camera key and no lens cover

- Poor camcorder performance, jerky 720p videos

- Below-par sunlight legibility

- Wi-Fi signal degrades when you cover the top part of the back panel

- microSD is below the battery cover

Video-chat enthusiasts will cheer the front-facing camera, while those who want lots of apps installed on their smartphones will appreciate the extended built-in memory.

The HTC Desire S at ours

The bad news is the Desire S is – in more than one way – running against the clock. A year is a really long time in cell phone terms and there’s no guarantee the updates are enough to make it competitive in a market that’s embracing dual-core and pushing beyond the 1GHz mark.

The easiest way to dispel the doubts would be to sail smooth through this review. So why wait – unboxing coming up right after the break.

Nvidia GeForce GTX 580

10:00

Posted by _Blogger_

In early 2010 Nvidia released their GTX 400 series of graphics cards for the hardcore gamers out there. However, just six months later they released the Nvidia GeForce GTX 580, the first model in the 500 series and the new flagship graphics card for this outstanding company. Much like the 480, this model uses DirectX 11 and has a single GPU. Most of the primary features that affect the 3D rendering and speed were updated to meet today's standards and the reality is that the GTX 580 is one of the fastest and best-performing graphics cards on the market. Because of this it received our TopTenREVIEWS Gold Award for overall power and performance.

GPU/Interface:

Nvidia chose to stay with the single GPU for the GTX 580, which is by no means a bad choice; they have been making this type of video card for years and know exactly what they are doing. This is by far the most powerful single-GPU card on the market when it comes to overall power output and benchmark tests. If you compare this card to that of the older 480 model you will notice that the specs are slightly higher across the board and that the power needed to run the card is slightly less than most of the competition. The transistor count was upped ever so slightly from their previous model and still outnumbers that of any card on the market.

Video Memory:

When it comes to finding a graphics card that will be able to play any game at top performance it's important to look at the clocked memory and overall memory. The Nvidia GTX 580 has the highest clocked memory speed we've seen – 2004 MHz – which even outperforms any dual-GPU cards on the market today. You are going to love the 1.5GB of memory because this will ease the amount of RAM needed to render the intense graphics of games like "COD: Black Ops" and other graphics-intensive games.
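Memory clock is only part of the story: peak bandwidth also depends on how wide the memory bus is. The sketch below is a rough back-of-the-envelope calculation, assuming the card's 384-bit bus and assuming the quoted 2004 MHz clock moves data twice per cycle (double data rate). The function and parameter names are ours.

```python
def memory_bandwidth_gb_s(bus_width_bits: int,
                          memory_clock_mhz: float,
                          transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    transfers_per_clock=2 is an assumption (double data rate on the quoted clock).
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


gtx580_bandwidth = memory_bandwidth_gb_s(bus_width_bits=384, memory_clock_mhz=2004)
print(f"GTX 580 peak memory bandwidth: {gtx580_bandwidth:.1f} GB/s")
```

Under those assumptions the result rounds to the 192.4 figure quoted for this card's bandwidth.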

The memory in this graphics card is GDDR5, which is the new standard for any card that wants to compete with other high-end gaming hardware. The memory bus width did not change from the 480 model and stayed at 384 bits, which should still do just fine compared to most graphics cards on the market today. The resulting 192.4 GB/s of memory bandwidth comes very close to that of dual-GPU cards.

Display Interface:

The Nvidia GTX 580 is like most other graphics cards from Nvidia and simply offers the connections that are most common. In this case it offers dual-link DVI and Mini-HDMI 1.3, and also supports VGA. Dual-link DVI is by far the most common connection type and pretty much makes up for the omission of a full-size HDMI connection. This card has the connections that are in demand and not a lot of extra ones, which can be nice if you don’t know a lot about graphics cards and want to install your own.

Supported API:

With the release of Windows 7 also came the very important DirectX 11 update to graphics cards. Nvidia's first card to offer this DX 11 was the 480, so it was no surprise to see their replacement model with the same technology. The reality is that both of these cards use Fermi architecture based on CUDA and also use DirectX 11 for superior tessellation, which has always been one of Nvidia's strongest areas and will most likely stay that way.

Rendering Technologies:

The Nvidia GTX 580 graphics card belongs to the second series of models to use what Nvidia likes to call Fermi; this is basically what CUDA was but with greater potential. CUDA allowed for higher performance, a wider array of applications to run at once, full-spectrum computing and just about anything else in terms of computing power. Now take that and add in the capability to work with everything DirectX demands and you have the new and improved Fermi. This technology is designed to aid developers, allowing them to create even better games for all of us to enjoy.

One of the main areas that Nvidia focuses on with their graphics cards is tessellation. This technology basically allows complex, intricate images such as clothing, water and fire to render faster. Things that have a lot of moving parts such as hair or grass are much harder to render because there are thousands or even millions of objects that have to move according to the wind or the movement of an object. Tessellation makes it possible for each blade of grass or flame in a fire to act like it would if you were to see it in real life. This technology is always improving and will always be hugely important since games will continue to become more lifelike and even more detailed.

The ability to run multiple screens at the same time is another thing that most gamers and game developers need. This graphics card only allows for three displays, which is enough for most gamers out there but will fall short for those developing the games of the future.

Additional Features:

All Nvidia cards can support up to 3 GPUs at the same time and for the most part this is about all you would ever need for any system. The graphics card itself is cooled by a fan and is also cooled by the fans or liquid cooling system installed into your machine; the combination should have no problem keeping this card at a reasonable temperature. The card is quite thick, at 4.4 inches high, and will take up 2 slots on most motherboards; keep this in mind if you are planning on adding two cards to your system.

Summary:

When push comes to shove, the Nvidia GeForce GTX 580 is going to beat out just about every other graphics card. In terms of tessellation, there is no graphics card on the market that can outdo the GTX 580. We would love to see Nvidia add a dual-GPU card to their lineup, but we're more than confident that this card will impress any gamer out there. Now if you want to really destroy the competition you can link two 580s together with Nvidia's own SLI multi-GPU solution; that would outperform anything on the market without even breaking a cyber-sweat.

Pros: The updated memory clock speed and use of Fermi technology make this card exceptional.

Cons: It only has a single GPU.

The Verdict: The fastest single GPU on the market hands down.

Screenshots:

ATI Radeon HD 5970 Review

08:54

Posted by _Blogger_

The flagship of the ATI Radeon 5000 series is the ATI Radeon HD 5970. This card is quite the performance beast. Many have benchmarked this card alongside other top performers and it reigns supreme. We rated this card higher than the others because of its overall superiority. Even with the recent release of Nvidia’s 500 series card, it has stood its ground. Nvidia may have another 500 series graphics card up their sleeve to challenge the 5970, but for now the Radeon product has no equal, which is why it received our TopTenREVIEWS Gold Award.

GPU/Interface:

The Radeon HD 5970 is a dual core graphics card like Nvidia’s GTX 295. It’s a fairly new idea, but seems to be well received, and may even be the future for all graphics cards. You could outperform this card with a pair of 5870s or GTX 480s, but the cost is prohibitive.

Having a dual core graphics card essentially means that you have a CrossfireX (dual card) system, but in this case, like the GTX 295, it’s all in one card. With this one card, almost every attribute is doubled. A good example of this is the core clock speed, which is 725MHz x 2, theoretically giving you 1450MHz. For this reason the 5970 has quite an impressive set of GPU and memory specs.

There are a total of 3200 stream processors in this card, which gives it an acceleration edge over the competition. With a setup like this, you have fill rates of 46.4GPs (gigapixels) and 116GTs (gigatexels or texture pixels) to help get your FPS (frames per second) up, giving you a smooth gaming and video rendering experience.
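The fill rate figures can be roughly reproduced from the 725MHz core clock. The sketch below is only an illustration: the per-GPU counts of render output units and texture units are our assumptions and are not stated in this review, and the function name is made up for the example.

```python
CORE_CLOCK_MHZ = 725          # core clock quoted in the review
GPU_COUNT = 2                 # the 5970 carries two GPUs on one card
ROPS_PER_GPU = 32             # assumption: render output units per GPU
TEXTURE_UNITS_PER_GPU = 80    # assumption: texture units per GPU


def fill_rate_giga(units_per_gpu: int, gpu_count: int, clock_mhz: float) -> float:
    """Theoretical fill rate in billions of pixels or texels per second."""
    return units_per_gpu * gpu_count * clock_mhz / 1000


pixel_fill = fill_rate_giga(ROPS_PER_GPU, GPU_COUNT, CORE_CLOCK_MHZ)
texel_fill = fill_rate_giga(TEXTURE_UNITS_PER_GPU, GPU_COUNT, CORE_CLOCK_MHZ)
print(f"Pixel fill rate: {pixel_fill:.1f} gigapixels/s")
print(f"Texel fill rate: {texel_fill:.1f} gigatexels/s")
```

With those assumed unit counts, the numbers line up with the 46.4 and 116 figures quoted above. These are theoretical peaks, so real-world throughput will be lower.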

Video Memory:

The 5970 has the latest in video memory, GDDR5. In the stock configuration, the memory chips run at an impressive frequency of 1.0GHz x 4; however, they are rated for 1.25GHz operation, which gives you room to overclock. The memory is underclocked because the card is already pushing the PCIe 300 watt limit, maxing out at 294 watts.

There is a 2GB capacity on this card, which should ease your RAM usage and provide some extra speed. More and more, less RAM is necessary on the motherboard if you have a good graphics card. If that's the case, then it'll probably be more than gamers and video rendering employees who get the nice graphics cards like the Radeon HD 5970.

Supported API:

Among the Radeon HD 5000 series graphics cards' best features is the presence of DirectX 11 support. With the release of Windows 7 came DirectX 11, and AMD jumped on board quickly. Until the recent release of the GTX 400 series, Nvidia didn't have anything to rival these cards. It's true that there isn't much in the way of content for DX 11 yet, but that still put the 5000 series ahead of the game and ready to move forward. Although the current Nvidia flagship, the GTX 480, doesn't have the power of the Radeon HD 5970, it still put them back in contention with the rest of the ATI graphics cards.

Rendering Technologies:

The ATI Radeon HD 5970 can support a maximum resolution of 2560 x 1600 on not one, but rather, three monitors. This ability is due to AMD's Eyefinity technology. Although the other 5000 series cards have the same technology available, the 5970 has real potential in this area. In fact, to really test the capacity of the 5970, it has been suggested that you'd have to run it on three 2560 x 1600 resolution monitors. That's some serious power. There are even some dual 5970 CrossFireX systems that can do far greater resolutions across 6 monitors. That's probably overkill for the average consumer, but it's fun knowing that you have that ability.
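To get a feel for how demanding that three-monitor setup is, here is a small sketch that counts the pixels the card has to draw each frame. The comparison against a single 1920 x 1080 screen is our own addition for scale, and the function name is illustrative.

```python
def total_pixels(width_px: int, height_px: int, monitors: int = 1) -> int:
    """Pixels drawn per frame across identical monitors."""
    return width_px * height_px * monitors


eyefinity_pixels = total_pixels(2560, 1600, monitors=3)
full_hd_pixels = total_pixels(1920, 1080)  # single-screen baseline, chosen by us

print(f"Three 2560 x 1600 monitors: {eyefinity_pixels:,} pixels per frame")
print(f"That is {eyefinity_pixels / full_hd_pixels:.1f}x a single 1920 x 1080 screen")
```

Pixel count is only a rough proxy for rendering load, but it shows why such a setup is suggested as a real test for this card.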

Additional Features:

This graphics card is really quite large, even when compared to other dual GPU cards. It is over 12 inches long, nearly 4 inches wide and about an inch and a half tall. The size obviously suggests some weight, and sitting at around 3.5 pounds, it's one of the heaviest graphics cards on the market.

Sitting on the GPUs is a large aluminum heat sink made up of 36 fins. It is roughly half the size of the card. The heat sink helps cool not only the GPUs but also the built-in memory. It's designed this way for efficiency, especially with the help of the highly heat-conductive copper plate on the bottom.

The 75x20mm fan draws the heat off the heat sink and pushes it out through the back of the card. The fan operates relatively quietly while the card idles at a low 42 watts. While gaming, the fan picks up the pace as the card draws up to 294 watts, but even at full rotation, it doesn't put out any kind of intolerable jet-engine sounds. Some other cards have had issues with noise under load, but that shouldn't be a big deal with the 5970.

Concealing the heat sink, fan and the rest of the graphics card is the recognizable Radeon HD 5870 design. AMD probably went with this setup because it helps protect the card very well. It is fairly similar to the housing that Nvidia has been using for a while now.

The 5970 is a beefy graphics card and requires a fair amount of energy. To power it, AMD added a 6-pin and 8-pin pair of PCI Express power connectors. Previous dual-GPU graphics cards have used the same setup. Dual 6-pin connectors have been the most common standard for single GPUs, but it seems the additional GPU requires a little more. It's important to know this so you make sure to get a compatible power supply.
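When checking a power supply, it helps to add up what each power source can deliver. The sketch below uses the commonly cited PCI Express ratings (75 watts from the slot, 75 watts per 6-pin plug, 150 watts per 8-pin plug); those ratings are our assumption rather than figures from this review, while the 294 watt peak draw is the number quoted above.

```python
# Commonly cited PCI Express power ratings in watts (assumed, not from the review).
PCIE_SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150


def power_budget_w(six_pin: int = 0, eight_pin: int = 0) -> int:
    """Maximum rated power for a card fed by the slot plus auxiliary connectors."""
    return PCIE_SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


hd5970_budget = power_budget_w(six_pin=1, eight_pin=1)
dual_six_pin_budget = power_budget_w(six_pin=2)
headroom = hd5970_budget - 294  # 294 W is the peak draw quoted in the review

print(f"6-pin + 8-pin budget: {hd5970_budget} W, headroom over 294 W: {headroom} W")
print(f"Dual 6-pin budget: {dual_six_pin_budget} W")
```

Under these ratings a dual 6-pin layout would fall short of the card's peak draw, which is consistent with the move to a 6-pin plus 8-pin pair.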

Summary:

Plain and simple, the ATI Radeon HD 5970 is a monster in the world of graphics cards. Even paired against Nvidia's GTX 480 it still has a commanding performance edge. The competition between these two parties has a history. They are continuously fighting for that top spot even though it is mostly a niche product that only enthusiasts go after. The battle between these two manufacturers is ongoing but it seems that the tides have turned in favor of AMD for the time being.

Screenshots:

Graphics Cards: What to Look For

Friday 6 May 2011 08:52

Posted by _Blogger_

There is a lot to graphics cards, but we've managed to break them down and define the key aspects so you can find the one that works for you. They break down into the following categories.

GPU/Interface

The graphics processing unit (GPU) is basically a second CPU but is designed to offload all the graphics rendering from the CPU and process it on the graphics card. Obviously, these are made to handle intense graphical workloads, which frees up your CPU to run all the other aspects of applications. What does that mean for you? Faster processing! The fastest processor on the market would bog down and overheat if it tried to process graphics in addition to everything else. The main thing you're looking for on the GPU is the clock speeds.

Video Memory

This criterion goes hand in hand with the GPU; you also want a fast clock speed. The amount of memory is also crucial because it is the GPU’s own RAM. Like your CPU needs RAM, the GPU needs its own video memory to reach higher speeds. Also, the larger the bus width and bandwidth, the more information can be processed simultaneously.

Display Interface

These are the connections that the graphics card uses to connect to a monitor. DVI, HDMI and DisplayPort all support the higher resolutions, with dual-link DVI as the most common. It is important to match up these connections with the ones your monitor has. If your monitor doesn’t have the same connections, then you have to get a converter cable, which may result in lower resolutions.

Supported API

Application Programming Interface (API) is the interface that enables interaction with software. Software that wants to use the GPU has to be compatible with the API of the card. For example, if your card only supports DirectX 9 and you buy a game or application that requires DirectX 11, you will have to upgrade your card in order to run it.
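The compatibility rule above boils down to a simple comparison. Here is a minimal sketch, assuming that a card supporting a newer DirectX version can also run software written for older versions, and treating versions as whole numbers. The function name is hypothetical, and real-world compatibility also depends on the operating system and drivers.

```python
def can_run(card_directx: int, required_directx: int) -> bool:
    """True if the card's supported DirectX version meets the software's requirement.

    Assumes newer DirectX support covers older versions (a simplification).
    """
    return card_directx >= required_directx


# The example from the text: a DirectX 9 card and a DirectX 11 game.
print(can_run(card_directx=9, required_directx=11))   # card needs upgrading
print(can_run(card_directx=11, required_directx=9))   # newer card, older game
```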

Rendering Technologies

This criterion refers to the various technologies that are integrated into the graphics cards. It’s hard to say which ones are better than others, because opinion-based prejudice runs rampant when it comes to graphics cards and which is the best. Truth be told, they’re all very similar and do basically the same things. Also, unless a game or application is built specifically with some of the technologies, they often don’t make a great deal of difference. Others do make a difference, however. Nvidia is known for their CUDA technology and it has been a thorn in the side of ATI for a while now. On the other end, ATI’s new Eyefinity technology looks pretty impressive when you stretch your desktop across 6 screens and maintain HD quality.

Additional Features

These are just a few extra goodies that aren’t extremely important and actually vary depending on the board manufacturer (ASUS, EVGA, Sapphire, etc). The information on our site is from the base models of each manufacturer. Typically, board manufacturers will have additional versions with multiple fans, liquid cooling and other variations.

If you put all of these things together, you get phenomenal graphics cards such as the ATI Radeon 5970, GeForce GTX 580 and GeForce GTX 480. Unfortunately most cards excel in one or two areas and fall short in another so there isn’t really a perfect one. We'll help you find the one that meets your expectations and fits in your budget. The better the card, the more it’ll cost, but the results can be well worth it.

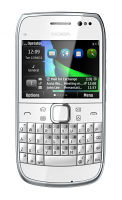

Nokia E6 hands-on: First look

Thursday 5 May 2011 22:36

Posted by _Blogger_

Introduction

The E6 is Nokia’s latest business offer and it has just landed in our office. It’s the company’s first smartphone running the new Symbian Anna OS out of the box. The E6 is a touch phone with a QWERTY keyboard and it even has a higher pixel density than the iPhone’s Retina display.

It has been a while since we’ve seen a high-end Nokia messenger. We are not trying to dismiss the previous E-series achievements; they’ve just become too conventional. Lucky for us the E6 brings a few interesting innovations to freshen up the lineup. It was about time!

The E6 is Nokia’s first handset with VGA resolution and also Nokia’s first QWERTY bar with a touchscreen. Its pixel density is even higher than that of Apple’s Retina display. The E6 doesn’t stop here though – it packs the same camera module as most of its Symbian^3 siblings – an 8 megapixel fixed-focus sensor capable of HD video recording. Add the new Symbian^3 update – Anna – and we might have a potent business or messaging phone, if nothing else.

Nokia E6 at a glance:

- General: GSM 850/900/1800/1900 MHz, UMTS 850/900/1700/1900/2100 MHz, HSDPA 10.2 Mbps, HSUPA 2 Mbps

- Form factor: Touchscreen bar

- Dimensions: 115.5 x 59 x 10.5 mm, 87 cc; 133 g

- Display: 2.46-inch 16M-color VGA TFT LCD capacitive touchscreen

- Memory: 8GB storage memory, 1GB ROM, hot-swappable microSD card slot (up to 32GB)

- OS: Symbian Anna OS

- CPU: ARM 1176 680 MHz processor, Broadcom BCM2727 GPU; 256 MB RAM

- Camera: 8 megapixel fixed-focus camera with dual-LED flash, geo-tagging; 720p video recording@25fps

- Connectivity: Wi-Fi 802.11 b/g/n, Bluetooth v3.0 with A2DP, microUSB port with USB on-the-go, 3.5mm audio jack, GPS receiver with A-GPS, HDMI port

- Misc: Accelerometer, Stereo FM radio with RDS, Flash support in the web browser, proximity sensor

- Battery: 1500 mAh Li-Ion battery

Nokia also put some work into the design, and the E6 turned out to be quite a looker, manufactured with premium materials. Jump to the next page to explore more about that.
